Preventing misuse of AI chatbots like ChatGPT and Bard: researchers find a solution

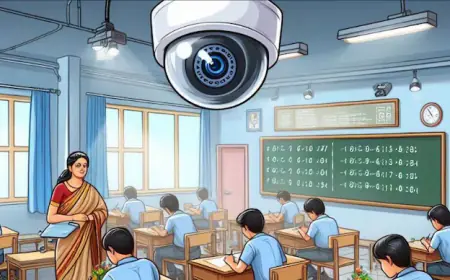

Misuse of AI-based chatbots: OpenAI's ChatGPT was released to internet users last year, and similar models followed one after another shortly afterwards. A section of society is worried that these chatbots could be misused. Researchers, meanwhile, claim to have found new ways to prevent such misuse.

Last year, the internet world saw a major shift when the American AI company OpenAI introduced a new kind of chatbot. Built on AI technology, ChatGPT could hold human-like conversations: by understanding questions written in natural language and answering in the same language, it began making everyday tasks easier for its users.

Other chatbots soon followed, and users can now choose among several of them, including ChatGPT, Microsoft's Bing Chat, and Google's Bard.

While these chatbots make users' work easier, some people have begun to worry about how they might be misused. Against this backdrop, researchers have put forward a new approach aimed at preventing the misuse of AI-based chatbots.

The researchers claim they have found a way to bypass the safety guardrails of chatbots made by Google, Anthropic, and OpenAI.

By exploring how these safeguards can be bypassed, the researchers aim to help ensure that chatbots do not give users harmful or incorrect information and do not put users' safety at risk.

The claim comes from researchers at Carnegie Mellon University in Pittsburgh and the Center for AI Safety in San Francisco.

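In their paper 'Universal and Transferable Adversarial Attacks on Aligned Language Models', the researchers show how jailbreaks can be created. These are specially crafted additions to a prompt that make a model ignore its safety rules. The jailbreaks were developed against open-source AI systems and can then be used to target popular mainstream chatbots as well.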